Museu do Amanhã in Rio de Janeiro, Brazil, is one of a very small cadre of museums experimenting with chatbots powered by artificial intelligence (AI). (Well, that seems appropriate. They are the Museum of Tomorrow, after all!) Curator Luiz Alberto Oliveira has described the museum’s objective as constructing “a sequence of experiences in which visitors can gradually acquire the means and resources to live out the possibilities of tomorrow that are opening up today.” In their latest project, the museum has partnered with IBM’s artificial intelligence services staff to create IRIS+, an AI that accelerates that connection. In this first of two posts exploring IRIS+, Daniel Morena, founder and creative technologist at 32Bits, describes the program, how it operates, and how it fits into the overall museum experience. In the second post, Eduardo Carvalho and Leonardo Menezes of the Museum of Tomorrow will join Daniel to talk about how they and their colleagues approached the design of an AI chatbot that would literally become the voice of the museum. –Elizabeth Merritt, Center for the Future of Museums

Say Hello to IRIS+

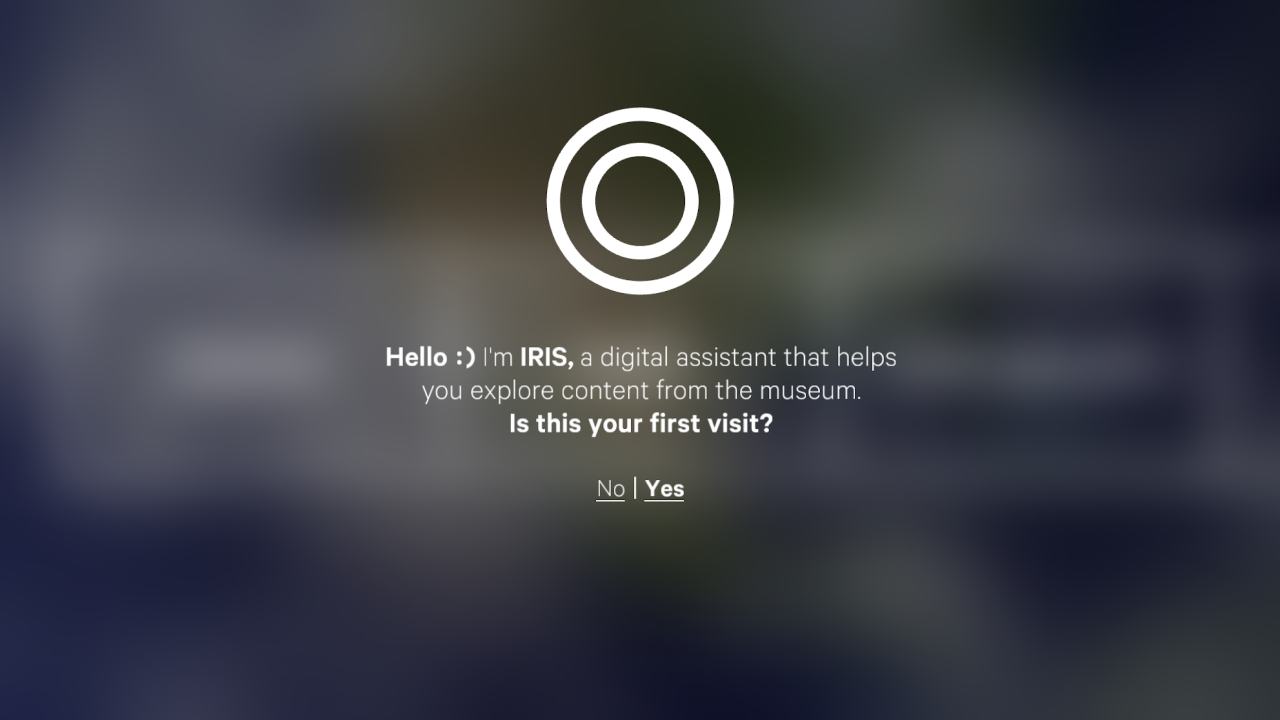

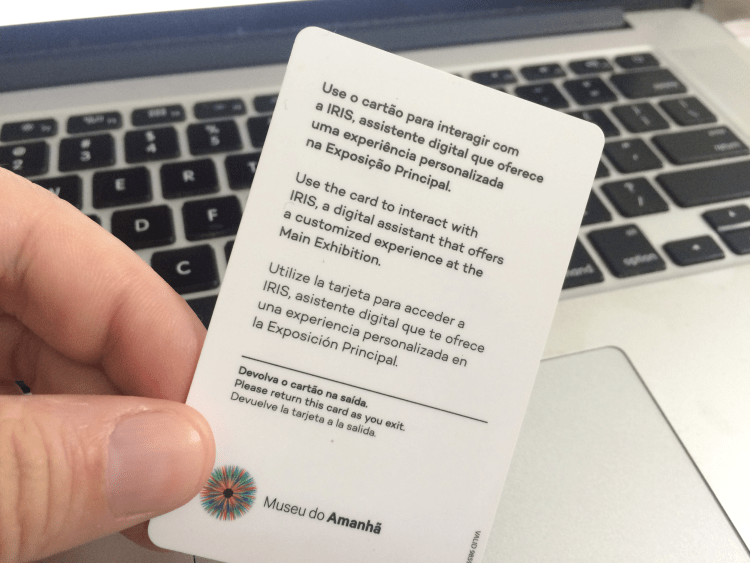

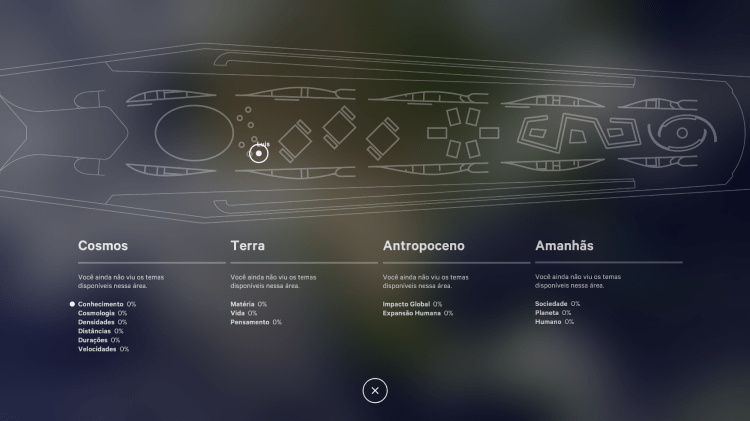

IRIS+ is a new experience integrated into the main exhibition of the Museum of Tomorrow in Rio de Janeiro, Brazil. When the museum opened in 2015, its original digital assistant, IRIS, was already a core part of the experience. Through IRIS, each visitor uses a chipped card to compile and personalize their experience as they interact with exhibit components throughout the galleries. IRIS+ takes this one step further, using artificial intelligence to make meaning of the data collected via these interactions, to engage with visitors via a conversational interface, and to connect visitors to social and environmental initiatives that will help them build a more inclusive and fair tomorrow for all.

IRIS

When my colleagues and I at 32Bits were invited to design and program IRIS+, we were actually continuing the IRIS project we had started in 2014 with Leonardo Menezes, Content Director at Museu do Amanhã. We proposed the name IRIS as a reference to the museum’s logo, which resembles a human iris. It was a short, catchy name that helped us create a personality for the museum. It has proven to be a good name for the digital entity that embodies all the characteristics the curatorial proposal defined for the museum: an intelligent organism that is always up to date, able to observe and understand the needs of its visitors and to help them navigate the more than 2,000 pages of content the museum offers. The assistant we built does all this, and it also suggests content not yet explored and engages in personalized dialogs to create a tailor-made experience for each visitor. (Following photos by Tiago Morena):

Hi, IBM Watson!

IRIS+ is an innovative application of IBM Watson’s artificial intelligence services. In general, AI applications are designed to answer your questions, respond to your commands, and perform tasks. IRIS+ takes the opposite approach. Her interaction with visitors, after a brief introduction, begins with a question, a provocation: “After everything you learned in the main exhibition, what are your concerns in today’s world?” That question sparks a conversation in which visitors can talk about their concerns. Throughout the dialog, the application exchanges data with the IBM cloud and uses the Watson Conversation service to continually extract meaning from the visitor’s answers and steer the conversation. At the end of the dialog, it suggests initiatives through which the visitor can take action to address their concerns.

This photo essay illustrates this interaction (photos by Nancy Torres):

Training Watson

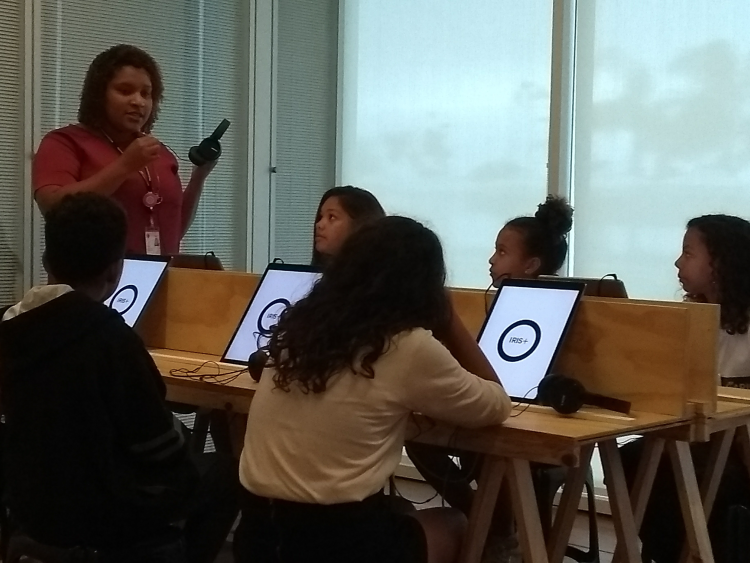

The IRIS+ project was the first time that Watson services were used to ask open-ended questions and accept unconstrained answers. Although visitors are prompted to talk about the museum’s topics, they can give any answer, which made training the AI a much more complex task. We also had to train Watson in Portuguese and expand its vocabulary! Training the conversation service to deal with any kind of response, and to discuss the various subjects covered in the museum, took about four months and involved the analysis of numerous conversations with hundreds of volunteers.

The first stage of the training was conducted through an email that prompted visitors to talk about their concerns, similar to the prompt provided in the final application. This helped us select an initial list of subjects and concerns, shaping the dialog structure of the final app. After this initial phase, we built a prototype application using the simplest possible interface—just enough to support the next stage of training, in which the dialog was refined and adjusted until it was as natural as possible. That process involved our team, the curator, and the museum content, communication, public development and IT teams working alongside IBM consulting staff.

In this phase, the IBM team also created a custom input system in which we register and describe social and environmental initiatives run by a variety of organizations and link them to our list of visitor concerns. The system makes this data available through a REST API that the final IRIS+ applications consume, so they stay up to date automatically.
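To make the registry idea concrete, here is a minimal sketch of how a client app might load initiatives from such an API and index them by visitor concern. The JSON record shape (`name`, `organization`, `concerns`) and the helper names are hypothetical; the post does not describe the real registry schema or endpoints.

```python
import json
import urllib.request
from collections import defaultdict
from dataclasses import dataclass, field


# Hypothetical record shape; the real IRIS+ registry schema is not published.
@dataclass
class Initiative:
    name: str
    organization: str
    concerns: list[str] = field(default_factory=list)


def parse_initiatives(payload: str) -> list[Initiative]:
    """Turn a JSON array of initiative records into Initiative objects."""
    return [
        Initiative(item["name"], item["organization"], item.get("concerns", []))
        for item in json.loads(payload)
    ]


def fetch_initiatives(url: str) -> list[Initiative]:
    """Download the current list, so new entries appear without an app update."""
    with urllib.request.urlopen(url) as response:
        return parse_initiatives(response.read().decode("utf-8"))


def index_by_concern(initiatives: list[Initiative]) -> dict[str, list[Initiative]]:
    """Group initiatives under every visitor concern they are linked to."""
    index: dict[str, list[Initiative]] = defaultdict(list)
    for initiative in initiatives:
        for concern in initiative.concerns:
            index[concern].append(initiative)
    return dict(index)
```

Because the app re-reads the list rather than bundling it, staff can register a new initiative once and every installed app picks it up on its next fetch.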

All the interactions that visitors had via the graphical interface were recorded, letting us measure the total time of each interaction and of data completion, as well as how long information took to travel to the Watson service and back before it was ready to be displayed. This data allowed us to refine and adjust the user experience as a whole, tweaking parts that were boring or tiresome to make conversing with IRIS+ as pleasant as possible. We ran about 700 tests before IRIS+ was released to the public with a voice interface. We wanted Watson to understand even broad answers, ranging from 10 words to as much as 45 seconds of speech.
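As an illustration of the kind of timing analysis described above, the sketch below summarizes one logged session. The `Exchange` record and its three timestamps are assumptions made for this example, not the actual IRIS+ log format.

```python
from dataclasses import dataclass
from statistics import mean


# Hypothetical log record for one question/answer exchange (times in seconds).
@dataclass
class Exchange:
    sent_at: float       # visitor's answer sent to the cloud service
    received_at: float   # reply received back from the service
    displayed_at: float  # reply rendered on screen


def round_trip(exchange: Exchange) -> float:
    """Time spent waiting on the remote service for one exchange."""
    return exchange.received_at - exchange.sent_at


def session_summary(exchanges: list[Exchange]) -> dict[str, float]:
    """Time from first answer to last displayed reply, plus round-trip stats."""
    trips = [round_trip(e) for e in exchanges]
    return {
        "total_seconds": exchanges[-1].displayed_at - exchanges[0].sent_at,
        "mean_round_trip": mean(trips),
        "max_round_trip": max(trips),
    }
```

Separating network round trips from on-screen rendering time helps show whether a slow moment comes from the service or from the interface itself.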

Accessibility matters

For the staff at 32Bits, IRIS+ reflects our view that exhibitions and interactive installations can and should be based on the principles of universal design. From the beginning of the project, there was a desire to provide a good experience for visitors with disabilities. Because the default interaction with IRIS+ is verbal, via spoken words, people with visual impairments can use the interactive with minimal support from museum staff. Visitors can also push a button to switch to a version of the interface with text input, which serves visitors with hearing impairments.

Suggesting an initiative

The “initiatives suggestion feature” is powered by an algorithm that uses a grading system to classify visitors’ concerns and correlate them with the initiatives. For example, let’s say that during a dialog, a user responds that what worries them most in today’s world is violence. In response, IRIS+ asks “Violence is a very broad issue. What kind of violence are you most concerned about?” The user might respond by saying “Violence against women …” which prompts IRIS+ to talk about the subject a little and then ask: “And what can we do to change that?” The visitor then says what he or she thinks is the right thing to do.
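The dialog above follows a simple shape: an opening question, a narrowing question when the topic is broad, then a call to action. The sketch below models only that flow. In IRIS+ the topic recognition is done by Watson; here the topic label is simply passed in, and the `NARROWING` table and function name are hypothetical.

```python
OPENING = ("After everything you learned in the main exhibition, "
           "what are your concerns in today's world?")
ACTION = "And what can we do to change that?"

# Hypothetical table of topics broad enough to need a follow-up question.
NARROWING = {
    "violence": ("Violence is a very broad issue. "
                 "What kind of violence are you most concerned about?"),
}


def next_prompt(topic: str, already_narrowed: bool) -> str:
    """Pick the next question for a recognized topic.

    Broad topics get one narrowing question; anything already specific
    (or already narrowed) moves straight to the call to action.
    """
    if topic in NARROWING and not already_narrowed:
        return NARROWING[topic]
    return ACTION
```

For the example dialog, "violence" yields the narrowing question, and the follow-up "violence against women" leads to "And what can we do to change that?"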

This kind of dialog generates a lot of information, which the application processes to estimate how confident it is about each subject discussed. For example, IRIS+ might classify the dialog above as:

- 90% certain that the dialog was about violence in general,

- 98% certain that it was about violence against women, and

- 10% certain that it was about transphobia.

With this in hand, the program compares this tagged data with the pre-existing classifications of available initiatives. An NGO on urban violence, for example, will win a point, but an NGO which supports women who have suffered violence from their partners is awarded additional points for being even more relevant to the conversation.
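One way to express the grading idea is sketched below: each topic an initiative shares with the dialog earns points weighted by the classifier’s confidence, and narrower topics earn more. The specificity weights and the 0.5 confidence cut-off are assumptions for illustration; the post does not give the real scoring rules.

```python
# Confidence scores from the example dialog (0.0 to 1.0).
dialog_topics = {
    "violence": 0.90,
    "violence against women": 0.98,
    "transphobia": 0.10,
}

# Hypothetical specificity weights: narrower topics are worth more points.
SPECIFICITY = {"violence": 1.0, "violence against women": 2.0, "transphobia": 2.0}
MIN_CONFIDENCE = 0.5  # assumed cut-off: ignore topics the classifier is unsure about


def relevance(initiative_topics: set[str], topics: dict[str, float]) -> float:
    """Sum confidence-weighted points for every topic the initiative shares."""
    score = 0.0
    for topic, confidence in topics.items():
        if confidence >= MIN_CONFIDENCE and topic in initiative_topics:
            score += SPECIFICITY.get(topic, 1.0) * confidence
    return score


urban_violence_ngo = relevance({"violence"}, dialog_topics)
womens_support_ngo = relevance({"violence", "violence against women"}, dialog_topics)
```

Under these assumptions the urban-violence NGO scores 0.9, while the NGO supporting women who have suffered violence scores about 2.86, so it ranks higher, matching the behavior described above.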

When interacting with IRIS and later with IRIS+, visitors are invited to give their personal information, such as name, email, date of birth, and where they live. That lets us refine the suggestions, finding initiatives closer to them and best suited to their age (the museum receives many visits from children). Finally, considering all of the data provided, we weigh the most appropriate and geographically accessible initiatives and suggest up to three with high relevance and a strong chance of engagement, to increase the likelihood that visitors will follow through and take action.
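The final selection step can be sketched as a filter followed by a ranking: drop initiatives the visitor is too young for or that are too far away, then keep the three highest relevance scores, breaking ties by distance. The `min_age` field, the 50 km radius, and the use of great-circle (haversine) distance are all assumptions for this example.

```python
import math
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    score: float   # relevance score computed upstream
    lat: float
    lon: float
    min_age: int   # hypothetical field: youngest age the initiative accepts


def distance_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, using the haversine formula."""
    r = 6371.0  # mean Earth radius in km
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def suggest(candidates: list[Candidate], age: int, lat: float, lon: float,
            max_km: float = 50.0, limit: int = 3) -> list[Candidate]:
    """Keep age-appropriate, nearby initiatives; return the top `limit` by score."""
    eligible = []
    for c in candidates:
        d = distance_km(lat, lon, c.lat, c.lon)
        if c.min_age <= age and d <= max_km:
            eligible.append((c.score, d, c))
    # Highest score first; among equal scores, the closer initiative wins.
    eligible.sort(key=lambda t: (-t[0], t[1]))
    return [c for _, _, c in eligible[:limit]]
```

Capping the list at three keeps the recommendation short enough that a visitor can realistically act on it.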

Data Visualization

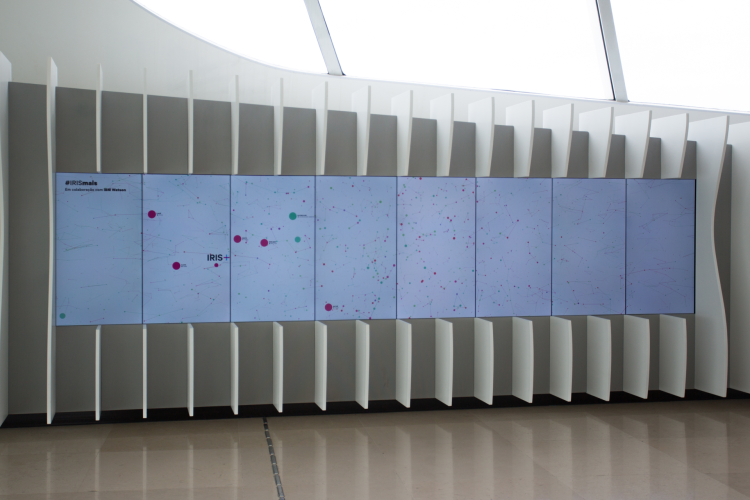

All information collected from visitors is stored by the museum’s internal system, Cerebro, and presented to the public on a large wall made up of eight 55” screens arranged side by side. The collected data appears as a large constellation of colored particles that connect randomly with each other, in a subtle, continuous motion. The total resolution of this compound screen is 8640×1920 pixels, and it is driven by two synchronized servers. Up to six visitors can interact simultaneously and continuously through applications installed on iPads. All the devices and servers are connected through a network.
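The resolution figures work out neatly: 8640 / 8 = 1080, so each panel contributes a 1080×1920 slice, consistent with full-HD screens mounted in portrait orientation. The sketch below maps each panel to its slice of the canvas and to a server, assuming (hypothetically) that each of the two servers drives four adjacent panels; the post does not say how the work is actually split.

```python
SCREENS = 8
WALL_W, WALL_H = 8640, 1920
SERVERS = 2

SCREEN_W = WALL_W // SCREENS                # 1080 px per panel
SCREENS_PER_SERVER = SCREENS // SERVERS     # 4, assuming an even split


def viewport(screen_index: int) -> tuple[int, int, int, int]:
    """(x, y, width, height) of one panel's slice of the full canvas."""
    return (screen_index * SCREEN_W, 0, SCREEN_W, WALL_H)


def server_for(screen_index: int) -> int:
    """Which server renders a given panel, under the even-split assumption."""
    return screen_index // SCREENS_PER_SERVER
```

Under this split, each server renders a 4320×1920 half of the wall, which is why the two machines must stay synchronized for particles to move smoothly across the seam.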

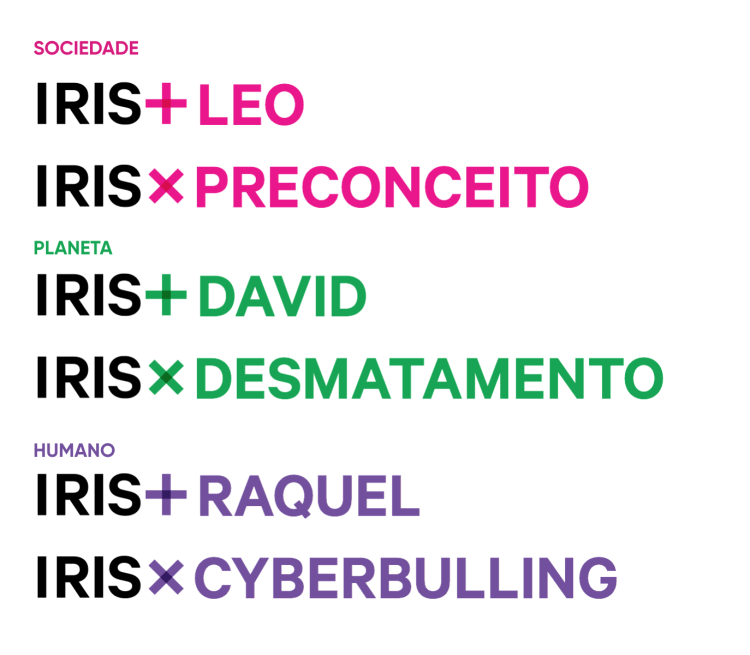

The constellation described above represents the visitors who participated in the experience. The visualization software we developed presents the last 5,000 visitors on screen as individual particles. The color of each particle corresponds to the signature colors associated with each of the major themes that make up the museum’s section of “tomorrows.” These themes are grouped around our relationship to the Planet, to our Society, and to the Human condition (limits and evolution). Each theme is identified with a specific chromatic spectrum. So, just by looking at the main colors in the constellation, before we even see numbers, we can get a sense of what concerns visitors most at that moment.
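A fixed-size window of recent visitors maps naturally onto a bounded queue: once 5,000 particles are on screen, each new visitor pushes the oldest one out. The sketch below shows that idea and computes the color mix a viewer would perceive. For simplicity it uses one color per theme group, and the hex values are hypothetical; the museum’s actual palette is not listed in the post.

```python
from collections import Counter, deque

# Hypothetical palette, simplified to one color per theme group.
THEME_COLORS = {"planet": "#2e8b57", "society": "#e0a000", "human": "#8a2be2"}


class Constellation:
    def __init__(self, capacity: int = 5000) -> None:
        # A bounded deque drops the oldest particle automatically when full.
        self.particles: deque = deque(maxlen=capacity)

    def add_visitor(self, visitor_id: str, theme: str) -> None:
        """Add one particle colored by the visitor's main theme."""
        self.particles.append((visitor_id, THEME_COLORS[theme]))

    def color_mix(self) -> dict[str, float]:
        """Fraction of on-screen particles in each color."""
        total = len(self.particles)
        if total == 0:
            return {}
        counts = Counter(color for _, color in self.particles)
        return {color: n / total for color, n in counts.items()}
```

The dominant fraction in `color_mix()` corresponds to the “sense at a glance” described above: whichever color fills most of the wall.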

The visitor’s concerns, when they are saved in the system, are classified as “subjects we are against,” “subjects we would like to preserve or see more of,” or “subjects we are reflecting on.” These positions are represented, respectively, by the symbols “x”, “+” or “&.”

To increase engagement, at the end of the dialog and recommendations, IRIS+ invites visitors to use the iPad to take a selfie and post it to the visualization wall. If a visitor agrees, the wall presents an animation that shows the visitor’s photo and changes its background color to match the theme identified in the visitor’s dialog. The name IRIS+ is displayed next to the visitor’s name together with their main concern, creating a new meaning. Putting those fragments together, we create phrases such as “IRIS + [visitor name] x violence” or “IRIS + [visitor name] + empathy”.
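The three positions and the phrase format lend themselves to a small enum plus a formatting function. This is a sketch of the idea only; the `Stance` and `wall_caption` names are hypothetical.

```python
from enum import Enum


class Stance(Enum):
    AGAINST = "x"    # subjects we are against
    PRESERVE = "+"   # subjects we would like to preserve or see more of
    REFLECT = "&"    # subjects we are reflecting on


def wall_caption(visitor_name: str, stance: Stance, concern: str) -> str:
    """Build the phrase shown on the wall, e.g. 'IRIS + Ana x violence'."""
    return f"IRIS + {visitor_name} {stance.value} {concern}"
```

Storing the stance as an enum keeps the symbol and its meaning in one place, so the wall and any reports use the same three categories.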

At the same time, the display reorganizes the constellation as a sphere. Highlighted particles in this sphere represent all visitors who share the same concerns as this visitor. Other particles, in the background, represent all visitors with different concerns. This creates an animated infographic that shows, in a simple and bold way, how one visitor’s particular concern relates to all the others. The visitor, also represented by a particle, sees that he or she is not alone, but is instead part of something greater. As complementary information, on the last screen of the wall, we show in numbers and graphs the three main concerns of all visitors, by theme.
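The two data operations behind this view are straightforward: split the visitors into those who share the current visitor’s concern and everyone else, and count the top three concerns within each theme. The sketch below assumes each stored visitor is a dict with `concern` and `theme` keys (a hypothetical shape) and leaves the sphere layout itself out.

```python
from collections import Counter, defaultdict


def split_particles(visitors: list[dict], current_concern: str) -> tuple[list[dict], list[dict]]:
    """Separate visitors sharing the current concern (highlighted) from the rest."""
    same = [v for v in visitors if v["concern"] == current_concern]
    others = [v for v in visitors if v["concern"] != current_concern]
    return same, others


def top_concerns_by_theme(visitors: list[dict], n: int = 3) -> dict[str, list[tuple[str, int]]]:
    """Count concerns within each theme and keep the n most frequent."""
    per_theme: dict[str, Counter] = defaultdict(Counter)
    for v in visitors:
        per_theme[v["theme"]][v["concern"]] += 1
    return {theme: counts.most_common(n) for theme, counts in per_theme.items()}
```

`Counter.most_common` already returns concerns sorted by frequency, which is exactly the order a ranked bar chart would need.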

Keep an eye on the CFM blog for IRIS+: part 2, in which museum staff will discuss how they decided what kind of personality their AI chatbot should have, and how it should behave in order to demonstrate the museum’s values about sustainability, tolerance, and inclusion.

Daniel Morena is the founder and creative technologist at 32Bits, the interactive design company responsible for some of the best-known interactive installations in Brazil, such as the “Alley of Words” at the Museum of the Portuguese Language, “Art that Reveals History” at the Catavento Cultural Institute, “Livro-Obra” (an iOS application that digitally recreates Lygia Clark’s work of the same name), and “IRIS” and “IRIS+” at the Museum of Tomorrow. He has spent almost 20 years creating dialogs between art, culture, design, and technology, and he is responsible for the studio’s technological research and digital products. Since 2018, he has lectured on Experience Design in the Entertainment Design master’s course held by IED Rio (European Design Institute).